
This FAQ answers basic questions about Visual Search and eVe functionality and features:

What is a Visual Search Engine?

A visual search engine enables users to search for and find visual information contained in images, graphics, videos and similar media. There are two approaches to retrieving visual information: text-based and content-based. Text-based search engines rely on manual annotation of the images and videos. Content-based, or Visual Search, engines rely on visual properties such as color, texture and shape, which are extracted automatically from the images and videos using image processing and pattern recognition techniques.

Nearly every Web or Digital Asset Management (DAM) user knows what it's like to search the Internet or a database using text-based search and be deluged by dozens of irrelevant results. For example, a recent search on Altavista.com for "Angels" turned up links to Web sites for Charlie's Angels, the Blue Angels, Angel perfume, the Guardian Angels, the neurogenetic disorder Angelman syndrome, Vanessa Angel, a schedule for the Anaheim Angels baseball team, angelfish, and references to the biblical angels.

Text-based search engines and DAM software offer image searches that comb the data using the text, such as file names, attached to pictures. This is an adequate way to search if the pictures are tagged correctly and the user knows exactly which keywords to enter.

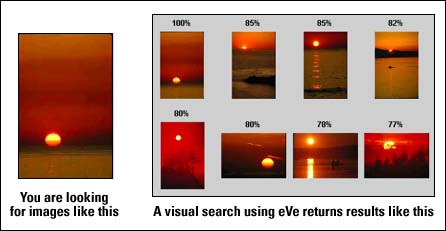

A visual search, in contrast, looks inside the actual image and uses mathematical algorithms that analyze color, shape and texture to return more accurate results. Users can select a query image, such as a picture of a sunset, and then "Zero In" on similar pictures by color, shape, texture or object.
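Query by example can be illustrated generically. The following Python sketch is not eVe's algorithm: it assumes each image has already been reduced to a numeric feature vector (here, hypothetical shares of red, green and blue) and simply ranks a collection by Euclidean distance to the query image's vector.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def rank_by_example(query, collection, k=3):
    """Return the names of the k images whose feature vectors lie closest to the query.

    `collection` maps an image name to a precomputed feature vector
    (hypothetical features chosen for illustration).
    """
    scored = sorted(collection.items(), key=lambda item: euclidean(query, item[1]))
    return [name for name, _ in scored[:k]]

# Hypothetical color-proportion vectors: (red share, green share, blue share).
images = {
    "sunset_1": [0.70, 0.20, 0.10],
    "sunset_2": [0.65, 0.25, 0.10],
    "forest":   [0.10, 0.80, 0.10],
    "ocean":    [0.05, 0.15, 0.80],
}
print(rank_by_example([0.68, 0.22, 0.10], images, k=2))  # ['sunset_1', 'sunset_2']
```

Smaller distance means "more similar"; a real engine would use richer features and an index instead of sorting the whole collection.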

Why is Visual Search so significant?

Besides giving users more accurate search capabilities, Visual Search offers several other advantages:

- Visual Search is cognitively faster than a text search - up to 200 times faster than reading text

- Visual Search lets you search on multiple concurrent meanings - images convey context, multivariate data and meaning

- Visual Search lets you "Zero In" on a target - in a few clicks you can refine your search for "More like this, not like that"

- Visual Search presents no cultural or language barriers - because it does not depend on text, it can be adopted immediately across languages and cultures
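One textbook way to implement "More like this, not like that" refinement is Rocchio relevance feedback, sketched below in Python. The FAQ does not say how eVe refines a search; this sketch assumes images are represented by feature vectors and uses the commonly cited weights alpha = 1.0, beta = 0.75 and gamma = 0.15, which are illustrative rather than tuned.

```python
def rocchio(query, relevant, nonrelevant, alpha=1.0, beta=0.75, gamma=0.15):
    """Move a query feature vector toward liked results and away from disliked ones.

    query       -- feature vector of the current query (hypothetical features)
    relevant    -- feature vectors the user marked "more like this"
    nonrelevant -- feature vectors the user marked "not like that"
    """
    def mean(vectors):
        # With no feedback of a given kind, that term contributes nothing.
        if not vectors:
            return [0.0] * len(query)
        return [sum(col) / len(vectors) for col in zip(*vectors)]

    rel, non = mean(relevant), mean(nonrelevant)
    return [alpha * q + beta * r - gamma * n for q, r, n in zip(query, rel, non)]

# The user likes a result rich in feature 0 and rejects one rich in feature 1.
refined = rocchio([0.5, 0.5], relevant=[[1.0, 0.0]], nonrelevant=[[0.0, 1.0]])
print(refined)  # roughly [1.25, 0.35]
```

The refined vector becomes the new query for the next round. Text-retrieval variants often clip negative components to zero; that step is omitted here.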

What is eVe?

eVe™ (eVision Visual Engine) is a content-based visual information search and retrieval engine that can be integrated seamlessly into text-based visual search engines to extend their functionality. eVe analyzes images and extracts their visual features for comparison with other images. The eVe toolkit provides a scalable infrastructure to acquire, process and manage visual assets through intuitive, customizable user interfaces. It can be deployed on the Internet or on corporate networks.

Using eVe, creators and users of media can enable their customers to find the desired products (for an eBusiness) or assets (for media asset management) rapidly, using visual media and fewer clicks. Specifically, eVe increases search precision by letting users start and refine a product search from desired visual features and from a Visual Vocabulary, a new paradigm in search technology.

How does eVe work?

eVe™ (eVision Visual Engine) is an advanced Visual Search engine that handles the analysis, storage, indexing and search/retrieval of images and video.

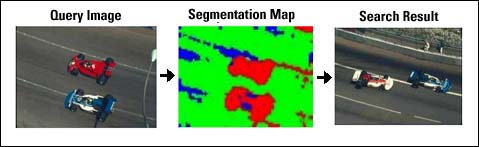

The core principle of eVision's technology, which differentiates it from all existing commercial visual search solutions, is that an image is not a mere conglomeration of pixels but a collection of pixels that form visual groups, which humans perceive as objects. Once these object regions are identified, features such as color, texture and shape are extracted for each of them. This gives the unprecedented ability to search at both the image level and the object level.

The eVe image analysis algorithms automatically segment an image into distinct regions, which correspond approximately to objects or parts of objects in the image. They then generate scale-independent descriptions of the color, shape, texture and object characteristics of those regions, known as visual signatures. These visual signatures are organized in a proprietary indexing scheme for extremely fast comparisons with other images.
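eVe's segmentation and indexing are proprietary, so the Python sketch below is only a rough stand-in for the segment-then-describe idea. It groups neighboring pixels that share a coarsely quantized color into regions with a breadth-first flood fill, then describes each region by its share of the image area and its mean color, both of which stay roughly the same when the image is uniformly rescaled. The image format (rows of (r, g, b) tuples), the 4-level quantization and the signature fields are assumptions made for illustration.

```python
from collections import deque

def quantize(pixel, levels=4):
    """Map an (r, g, b) pixel with 0-255 channels to a coarse color bin."""
    step = 256 // levels
    return tuple(channel // step for channel in pixel)

def segment(image, levels=4):
    """Group 4-connected pixels that share a quantized color into regions.

    `image` is a list of rows, each a list of (r, g, b) tuples.
    Returns a list of regions, each a list of (row, col) coordinates.
    """
    h, w = len(image), len(image[0])
    bins = [[quantize(p, levels) for p in row] for row in image]
    seen = [[False] * w for _ in range(h)]
    regions = []
    for r in range(h):
        for c in range(w):
            if seen[r][c]:
                continue
            # Breadth-first flood fill from this unvisited pixel.
            seen[r][c] = True
            queue, region = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and bins[ny][nx] == bins[r][c]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(region)
    return regions

def region_signature(image, region):
    """Hypothetical signature: the region's share of the image area and its mean color."""
    total = len(image) * len(image[0])
    n = len(region)
    mean = tuple(sum(image[y][x][i] for y, x in region) / n for i in range(3))
    return {"area_fraction": n / total, "mean_color": mean}

# A 2 x 4 toy image: reddish left half, bluish right half.
image = [
    [(255, 0, 0), (250, 5, 5), (0, 0, 255), (0, 0, 250)],
    [(255, 0, 0), (255, 0, 0), (0, 0, 255), (0, 0, 255)],
]
for region in segment(image):
    print(region_signature(image, region))
```

Production segmenters use far more robust grouping criteria; fixed quantization bins split smooth gradients arbitrarily.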

What are the differences between searching on color, shape, texture, and region?

When you search for images, you can compare them based on any combination of their properties: color, shape, texture, and region. For example, if you had a collection of pictures of handbags, you could retrieve all the red bags, all bags of a particular shape, or all plaid-patterned bags of a certain shape.
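A combined search can be thought of as a weighted average of per-feature similarity scores. The Python sketch below assumes each feature search already yields a score between 0 and 1; the scores and weights shown are hypothetical, and eVe's actual scoring is not described in this FAQ.

```python
def combined_score(similarities, weights):
    """Blend per-feature similarity scores (each in [0, 1]) into one score.

    Features with zero weight are ignored, so a user can search on any subset,
    e.g. color only, or texture plus shape.
    """
    active = {feature: w for feature, w in weights.items() if w > 0}
    if not active:
        raise ValueError("at least one feature needs a positive weight")
    total = sum(active.values())
    return sum(similarities[feature] * w for feature, w in active.items()) / total

# Hypothetical per-feature scores of one candidate handbag against the query.
bag = {"color": 0.9, "shape": 0.4, "texture": 0.8, "region": 0.5}
print(combined_score(bag, {"color": 1.0}))                   # "all the red bags"
print(combined_score(bag, {"texture": 1.0, "shape": 1.0}))   # plaid pattern of a given shape
```

Dividing by the total weight keeps the combined score in [0, 1], so results from different feature combinations remain comparable.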

Color: This is the easiest type of search to understand. It matches the predominant colors in the source and target images. The more colors the objects in the images have in common, and the more similar the proportions of those colors, the higher the similarity score.
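The behavior described above, where more shared colors in more similar proportions give a higher score, matches histogram intersection, a classic color-matching measure. Whether eVe uses it is not stated. This Python sketch assumes images are given as lists of (r, g, b) pixels with 0-255 channels and quantizes each channel into 4 levels; both choices are illustrative.

```python
def color_histogram(pixels, levels=4):
    """Normalized histogram of quantized (r, g, b) colors: bin -> share of pixels."""
    step = 256 // levels
    counts = {}
    for r, g, b in pixels:
        key = (r // step, g // step, b // step)
        counts[key] = counts.get(key, 0) + 1
    n = len(pixels)
    return {key: count / n for key, count in counts.items()}

def histogram_intersection(h1, h2):
    """Similarity in [0, 1]: the total proportion of color the two images share."""
    return sum(min(share, h2.get(key, 0.0)) for key, share in h1.items())

query = [(255, 0, 0)] * 3 + [(0, 0, 255)]        # 75% red, 25% blue
target = [(250, 10, 10)] * 2 + [(0, 0, 250)] * 2  # 50% red, 50% blue
other = [(0, 255, 0)] * 4                         # all green
print(histogram_intersection(color_histogram(query), color_histogram(target)))  # 0.75
print(histogram_intersection(color_histogram(query), color_histogram(other)))   # 0.0
```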

Shape: This type of search uses the two-dimensional outlines of objects within the image as patterns to match in the target image. The more closely the shapes match, the higher the target image's similarity score.
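As a simple, generic stand-in for outline matching (not eVe's method), the Python sketch below compares object silhouettes given as binary masks. Each mask is cropped to its bounding box and resampled onto a fixed grid, which removes differences in position and size, and the two grids are compared by intersection over union. The mask input format and the 8 x 8 grid are assumptions; this measure is not rotation-invariant.

```python
def normalize_mask(mask, size=8):
    """Crop a binary mask to its bounding box and resample it to size x size.

    Cropping and resampling make the comparison independent of the object's
    position and scale (but not of its rotation).
    """
    cells = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not cells:
        raise ValueError("mask is empty")
    ys = [y for y, _ in cells]
    xs = [x for _, x in cells]
    y0, x0 = min(ys), min(xs)
    h, w = max(ys) - y0 + 1, max(xs) - x0 + 1
    # Nearest-neighbour sampling of the bounding box onto a fixed grid.
    return [[mask[y0 + (i * h) // size][x0 + (j * w) // size] for j in range(size)]
            for i in range(size)]

def shape_similarity(mask_a, mask_b, size=8):
    """Intersection over union of two normalized masks, in [0, 1]."""
    a, b = normalize_mask(mask_a, size), normalize_mask(mask_b, size)
    inter = sum(1 for i in range(size) for j in range(size) if a[i][j] and b[i][j])
    union = sum(1 for i in range(size) for j in range(size) if a[i][j] or b[i][j])
    return inter / union

small_square = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
big_square = [[1 if 1 <= y <= 4 and 1 <= x <= 4 else 0 for x in range(6)] for y in range(6)]
triangle = [[1, 0, 0, 0], [1, 1, 0, 0], [1, 1, 1, 0], [1, 1, 1, 1]]
print(shape_similarity(small_square, big_square))  # 1.0
print(shape_similarity(triangle, big_square))      # 0.625
```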

Texture: A texture search identifies unique patterns of light and dark pixels within objects in the image, and then ranks the target images based on their similarity to that texture.
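The FAQ does not name eVe's texture descriptor. A widely used descriptor of "patterns of light and dark pixels" is the local binary pattern (LBP): each pixel gets an 8-bit code recording which neighbors are at least as bright as it, and an image's texture is summarized by the histogram of those codes. The Python sketch below assumes grayscale images given as rows of intensities and compares histograms with an L1 distance, which is one of several reasonable choices.

```python
def lbp_histogram(gray):
    """Normalized histogram of 8-neighbour local binary pattern codes.

    `gray` is a list of rows of intensities. Border pixels are skipped.
    """
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0] * 256
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            center = gray[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                if gray[y + dy][x + dx] >= center:
                    code |= 1 << bit
            hist[code] += 1
    n = sum(hist)
    if n == 0:
        raise ValueError("image must be at least 3 x 3 pixels")
    return [count / n for count in hist]

def texture_distance(h1, h2):
    """L1 distance between two LBP histograms (0 means identical texture statistics)."""
    return sum(abs(a - b) for a, b in zip(h1, h2))

checker = [[255 if (x + y) % 2 else 0 for x in range(6)] for y in range(6)]
shifted = [[0 if (x + y) % 2 else 255 for x in range(6)] for y in range(6)]
flat = [[128] * 6 for _ in range(6)]
print(texture_distance(lbp_histogram(checker), lbp_histogram(shifted)))  # 0.0
print(texture_distance(lbp_histogram(checker), lbp_histogram(flat)))     # 1.0
```

Because LBP codes compare each pixel only with its neighbors, the two checkerboards count as the same texture even though their pixels are inverted.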

Region: In a region search, eVe models the color surface of each object. This essentially gives a 3D shading representation of each object in the image.
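How eVe models the color surface is not described. One minimal way to picture a "shading surface" is to treat a region's intensity as a height over its pixels and fit the plane intensity = a*x + b*y + c by least squares, so that (a, b) captures the direction and strength of the shading. The Python sketch below does this for a single grayscale channel; the planar model, the input format and the function names are assumptions, and a realistic model would likely use higher-order terms and all color channels.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def fit_shading_plane(gray, region):
    """Least-squares fit of intensity = a*x + b*y + c over a region's (row, col) pixels.

    Returns (a, b, c): horizontal gradient, vertical gradient and offset.
    """
    sxx = sxy = syy = sx = sy = sxi = syi = si = 0.0
    for y, x in region:
        i = gray[y][x]
        sxx += x * x
        sxy += x * y
        syy += y * y
        sx += x
        sy += y
        sxi += x * i
        syi += y * i
        si += i
    # Normal equations A @ (a, b, c) = rhs of the least-squares problem.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(region))]]
    rhs = [sxi, syi, si]
    d = det3(A)
    if abs(d) < 1e-12:
        raise ValueError("region is too small or collinear to fit a plane")
    coeffs = []
    for k in range(3):
        # Cramer's rule: replace column k with the right-hand side.
        mk = [[rhs[r] if col == k else A[r][col] for col in range(3)] for r in range(3)]
        coeffs.append(det3(mk) / d)
    return tuple(coeffs)

# A 4 x 4 region whose brightness rises to the right and downward.
gray = [[2 * x + 3 * y + 10 for x in range(4)] for y in range(4)]
region = [(y, x) for y in range(4) for x in range(4)]
print(fit_shading_plane(gray, region))  # close to (2.0, 3.0, 10.0)
```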

Does eVe work for video as well as images?

Yes. Because this technology deals with the signal content at a fundamental level, it can be applied to audio content, video content and any other digital pattern, such as seismographic data. In the near future, the eVe SDK will be able to extract keyframes from videos, index them, and then search them. Once a keyframe image is found within a video, the video can be played from that keyframe.
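Keyframe extraction is not yet specified for eVe, but a common generic approach is to start a new keyframe whenever a frame's color histogram differs sharply from the last keyframe's. The Python sketch below assumes each frame has already been reduced to a normalized histogram (a list of bin shares); the threshold of 0.5, the frame rate and both function names are hypothetical.

```python
def select_keyframes(frame_histograms, threshold=0.5):
    """Return indices of frames that start a visually new segment.

    A frame becomes a keyframe when its color histogram differs from the most
    recent keyframe's histogram by more than `threshold` (L1 distance).
    """
    if not frame_histograms:
        return []
    keyframes = [0]
    for i in range(1, len(frame_histograms)):
        last = frame_histograms[keyframes[-1]]
        diff = sum(abs(a - b) for a, b in zip(frame_histograms[i], last))
        if diff > threshold:
            keyframes.append(i)
    return keyframes

def playback_offset(frame_index, fps=25.0):
    """Seconds from the start of the video at which a keyframe appears."""
    return frame_index / fps

# Two-bin histograms: a "red" shot, a "blue" shot, then red again.
frames = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [1.0, 0.0]]
keys = select_keyframes(frames)
print(keys)                                  # [0, 2, 4]
print([playback_offset(k) for k in keys])    # where playback would start for each match
```

Each keyframe is then indexed like a still image; a match maps back to its frame index, and from there to a playback position.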

How is eVe different from other visual search engines?

eVe's uniqueness comes from its ability to automatically segment an image into relevant object regions and generate signatures that capture the color, texture, shape, and object patterns. The ability to segment enables whole and partial image searches with unparalleled search accuracy.
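The benefit of segmentation for partial-image search can be illustrated with a small Python sketch: if every image is stored as a set of region signatures, an image can be scored by its best-matching region rather than by a whole-image average. Here each region signature is just a hypothetical mean (r, g, b) color, and the catalog values are invented for illustration; eVe's signatures are richer and proprietary.

```python
import math

def partial_search(query_color, catalog):
    """Rank images by their best-matching region rather than by the whole image.

    `query_color` is the mean (r, g, b) color of the query object, and
    `catalog` maps image names to the mean colors of their segmented regions.
    An image scores well if ANY of its regions is close to the query object.
    """
    best = {
        name: min(math.dist(query_color, color) for color in region_colors)
        for name, region_colors in catalog.items()
    }
    return sorted(best, key=best.get)

catalog = {
    "beach": [(240, 220, 150), (30, 100, 200)],      # sand, sea
    "forest": [(20, 120, 30)],                        # one large green region
    "handbag_ad": [(245, 245, 245), (200, 20, 30)],  # white backdrop, red bag
}
print(partial_search((210, 25, 35), catalog))  # ['handbag_ad', 'forest', 'beach']
```

A whole-image comparison would blend the white backdrop into the ad's signature and dilute the match with the red query object.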

What is Visual Vocabulary?

Visual Vocabulary™ is an automatic way to classify visually similar images and use one image to represent a group of similar images. In other words, hundreds of thousands of images can automatically be distilled down to a representative set of images that can be used to quickly search the entire inventory of images. This set of representative images is referred to as a Visual Vocabulary.
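The FAQ does not say how eVe forms these groups. One standard way to get "one image that represents a group of similar images" is to cluster visual feature vectors with k-means and then pick, for each cluster, the member image closest to the cluster center. The Python sketch below assumes two-value feature vectors, a user-chosen k and deterministic initialization from the first k images; all names and values are hypothetical.

```python
import math

def kmeans(vectors, k, iterations=20):
    """Plain k-means; the first k vectors serve as initial centroids (deterministic)."""
    centroids = [list(v) for v in vectors[:k]]
    assignment = [0] * len(vectors)
    for _ in range(iterations):
        # Assign each vector to its nearest centroid.
        assignment = [min(range(k), key=lambda c: math.dist(v, centroids[c])) for v in vectors]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, a in zip(vectors, assignment) if a == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return assignment, centroids

def visual_vocabulary(images, k):
    """Map each cluster's representative image (nearest its centroid) to the cluster members.

    `images` maps image names to feature vectors.
    """
    names = list(images)
    assignment, centroids = kmeans([images[n] for n in names], k)
    vocabulary = {}
    for c in range(k):
        members = [n for n, a in zip(names, assignment) if a == c]
        if members:
            rep = min(members, key=lambda n: math.dist(images[n], centroids[c]))
            vocabulary[rep] = members
    return vocabulary

# Hypothetical 2-value visual features for six catalog photos.
images = {
    "sweater_1": [0.90, 0.10],
    "shoe_1": [0.10, 0.90],
    "sweater_2": [0.80, 0.20],
    "sweater_3": [0.85, 0.15],
    "shoe_2": [0.20, 0.80],
    "shoe_3": [0.15, 0.85],
}
print(visual_vocabulary(images, k=2))
```

Browsing then starts from the representatives only, and selecting one opens its group.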

For example, current online stores have textual drill-down categories such as sweaters, pants, shoes and handbags. These categories help narrow a user's search but can often be frustrating, particularly when a user inadvertently misspells a word or an item is incorrectly tagged.

Visual Vocabulary eliminates these "human" errors. Instead of relying on text categories, Visual Vocabulary represents each group of merchandise (sweaters, pants, shoes and handbags) visually, creating a more fulfilling and less cumbersome shopping experience.

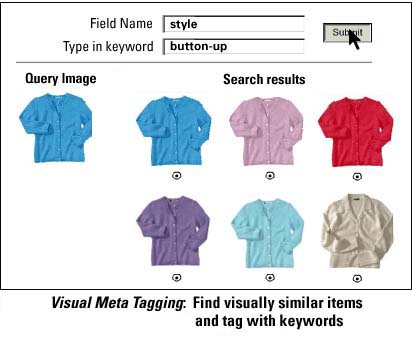

What is Visual Meta Tagging?

Traditionally, when you catalog images by attaching keywords, you open each image one by one and decide which keywords are appropriate. For thousands of images, this can be a time-consuming and error-prone task.

Visual Meta Tagging™ significantly reduces the effort of meta tagging image assets at the time of ingestion. Visual Meta Tagging organizes image data into relevant groups using the visual content of the images. The representatives of these groups constitute the Visual Vocabulary. Text tags can be associated with each Visual Vocabulary item and then applied to a selected group of images classified under that item. Alternatively, a search can be performed with an image, and a selected group of the retrieved results can be "mass-tagged" with a user-defined keyword set.
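Both tagging routes reduce to applying one keyword set to many images at once. The Python sketch below shows that bookkeeping with a hypothetical in-memory tag store (image name to set of keywords); the vocabulary contents, search results and keywords are invented for illustration and say nothing about eVe's actual storage or API.

```python
def mass_tag(tags, images, keywords):
    """Add every keyword to every image in `images`; `tags` is updated in place.

    `keywords` should be a collection of strings (a bare string would be
    split into single characters by set.update).
    """
    for image in images:
        tags.setdefault(image, set()).update(keywords)
    return tags

# Hypothetical Visual Vocabulary: representative image -> images grouped under it.
vocabulary = {
    "sweater_3": ["sweater_1", "sweater_2", "sweater_3"],
    "shoe_3": ["shoe_1", "shoe_2", "shoe_3"],
}
tags = {}

# Route 1: tag a whole group through its Visual Vocabulary item.
mass_tag(tags, vocabulary["sweater_3"], {"sweater", "knitwear"})

# Route 2: tag a user-selected subset of the results of an image search.
search_results = ["shoe_2", "shoe_1", "sweater_2"]  # hypothetical ranked results
selected = search_results[:2]                       # the user keeps the two shoes
mass_tag(tags, selected, {"shoe"})
print(tags)
```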

Can I use eVe on my web site?

eVe is currently available for use by software developers. Developers must obtain an eVe license from eVision for production development and implementation.
